IRIS is funded by the European Commission under the FP7 Marie Curie action,

Industry Academia Partnerships and Pathways (IAPP),

grant agreement nº 610986

The first part of the project consists of studying and defining a user profile based on user pathologies and case scenarios, as well as developing the technical platforms for the final phase of the project. This encompasses the development of input (speech, silent speech, gesture, tactile/haptic devices) and output (animated characters, pictograms and personalized synthetic voices) communication modalities, together with essential modules of the platform such as the management of these modalities and user authentication.

In objective 2, we first need to establish a framework for the objective evaluation of the previously developed work. This evaluation will be based on biological and psychophysical measures such as electromyography (EMG), electrodermal activity (EDA), eye tracking and optical brain imaging (fNIR). Usability evaluation will be a continuous process throughout the project in order to guide development and assess user performance with the proposed systems in virtual and real-world situations.

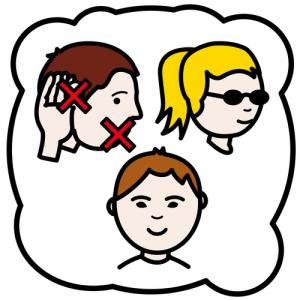

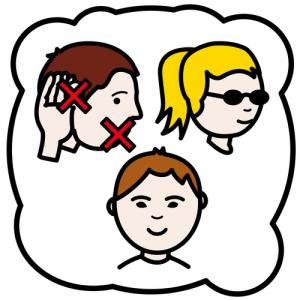

WP1 will build the user model by defining a user profile that includes information about the person's cognitive level and sensorial and physical disabilities. The user profile will initially be filled in during user registration, through a set of simple tests that gather information about the three axes (cognitive, sensorial and physical). As the user's limitations have a great impact on the interaction, the user profile must be adapted continuously to the user's impairments, and it should define how the platform reacts to user inputs and how to display output messages in the way the user can most easily understand.
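As a rough illustration of the three-axis profile described above, the sketch below stores one score per axis, updates it as new observations arrive, and picks an output modality from it. Everything here is an assumption made for illustration: the class and function names, the [0, 1] scoring scale (1 meaning no limitation), the moving-average update and the thresholds are hypothetical and are not taken from the IRIS platform.

```python
from dataclasses import dataclass

AXES = ("cognitive", "sensorial", "physical")


@dataclass
class UserProfile:
    """Hypothetical profile: one score per axis in [0, 1], where 1 means no limitation."""
    cognitive: float
    sensorial: float
    physical: float

    def update(self, axis: str, observed: float, weight: float = 0.2) -> None:
        """Blend a new observation into one axis (exponential moving average)."""
        if axis not in AXES:
            raise ValueError(f"unknown axis: {axis}")
        if not 0.0 <= observed <= 1.0:
            raise ValueError("observed must be in [0, 1]")
        current = getattr(self, axis)
        setattr(self, axis, (1 - weight) * current + weight * observed)


def choose_output(profile: UserProfile) -> str:
    """Pick an output modality from the profile (illustrative thresholds only)."""
    if profile.cognitive < 0.4:
        return "pictograms"
    if profile.sensorial < 0.4:
        return "animated character"
    return "synthetic voice"
```

For example, a profile created at registration as `UserProfile(cognitive=0.3, sensorial=0.9, physical=0.9)` would map to pictograms here, and later calls to `update` would shift that choice as the observed scores change.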

This WP will provide a set of natural interfaces to be used in a multimodal way. MSFT, leader of this WP, will develop a multimodal silent speech interface based on Visual Speech Recognition, in collaboration with UAVR. MSFT will also explore the use of gesture as an alternative modality for Portuguese. METU will provide mentoring support for denoising and source localization, yielding higher-quality speech recordings that enhance the performance of speech recognition and speaker identification. METU will also explore the integration of tactile/haptic devices as alternative or parallel interfaces. UAVR, drawing on its wide experience in the subject, will provide valuable information about the human speech production model using technologies such as real-time Magnetic Resonance Imaging, encompassing data acquisition, processing and analysis. This will pave the way for robust speech and silent speech interfaces by providing data for articulatory speech characterization and for the validation and improved understanding of different data acquisition modalities such as electromyography (CITE). UNIZAR will provide acoustic confidence measures for acoustic model adaptation for users with speech impairments.

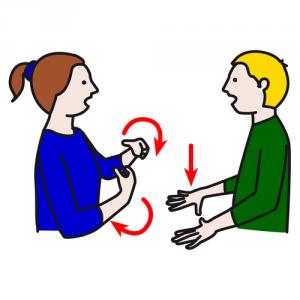

This WP will provide a Visual Speech Synthesis platform. FIM will be responsible for an automatic method of facial animation playback for human and animated characters based on speech recognition, and for integrating this system into the IRIS platform. In collaboration with UAVR and MSFT, FIM will improve its system with a coarticulation model and personalized synthetic voices. MSFT will develop a platform for creating personalized synthetic voices (a synthetic voice based on the user's voice font). This way, the user will be able to interact with the system using a familiar voice, or even his or her own. MSFT will also explore the creation of personalized synthetic voices for users at risk of losing their ability to produce audible speech in the future. MSFT's participation will be complemented by the development of a coarticulation model for the Visual Speech Synthesis system built by FIM. This will be carried out in collaboration with UAVR and will profit from existing experience concerning the articulatory characterization of European Portuguese. METU will explore the application of Spatial Audio Object Coding (SAOC) to the synchronization of audio-visual objects, in particular between the animated characters (developed by FIM) and speech. METU will also provide mentoring on GPUs. UNIZAR will be responsible for introducing pictograms as system output for users who require this communication channel.

Experiments with Pictograms:

The work carried out includes careful analysis of which modalities to fuse, when and how, in order to respond adequately to users' goals and context. It strives for additional robustness in situations such as noisy environments, or where privacy issues and existing disabilities might hinder single-modality interaction. The envisaged fusion engine is not meant to provide a generic, versatile approach to the fusion problem but a highly focused one, based on the modalities used and the defined application scenarios, aiming for simplicity.
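To make the idea of a focused, scenario-driven fusion concrete, the following sketch combines recognition hypotheses from several modalities using a small table of context-dependent weights, so that speech counts for less in a noisy environment. This is only one possible reading of the paragraph above: the weight values, the context names and the `fuse` function are assumptions for illustration and do not describe the actual IRIS fusion engine.

```python
from collections import defaultdict

# Hypothetical reliability weights per context and modality (illustrative values).
CONTEXT_WEIGHTS = {
    "quiet": {"speech": 1.0, "silent_speech": 0.7, "gesture": 0.8},
    "noisy": {"speech": 0.3, "silent_speech": 0.9, "gesture": 0.8},
}


def fuse(hypotheses, context):
    """Return the command with the highest weighted score, or None if there is no input.

    hypotheses: iterable of (modality, command, confidence) with confidence in [0, 1].
    context: a key of CONTEXT_WEIGHTS describing the current situation.
    """
    weights = CONTEXT_WEIGHTS[context]
    scores = defaultdict(float)
    for modality, command, confidence in hypotheses:
        # Modalities without a weight in this context contribute nothing.
        scores[command] += weights.get(modality, 0.0) * confidence
    if not scores:
        return None
    return max(scores, key=scores.get)
```

Keeping the weights in a plain table, one row per application scenario, reflects the stated preference for simplicity over a generic fusion framework.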

In recent years, great advances have been achieved in the field of personal identification based on voice and multimodal biometrics. Natural ways of interacting with electronic devices must now also include useful and easy methods for verifying the identity of each authorized user of such platforms, in order to provide the required security of access to personal information and to protect privacy.

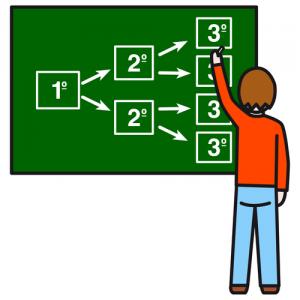

In this work package the goal is to provide a stable platform that integrates and represents the work performed in the previous WPs. The platform will be deployed at a minimum of two partners. This WP will not only be about integration but will also aim at transferring the knowledge acquired so far among the partners.

First, in a series of subjective experiments in the lab environment, the work will explore the development of a set of appropriate non-invasive biological and psychophysical measures. These include eye tracking measures of the user's attention and cognitive load during interaction with the interface, and functional near-infrared (fNIR) spectroscopy for functional neuroimaging, which measures brain activity, in particular cognitive load, through hemodynamic responses associated with neural behavior. Interaction analysis will be used as the complementary methodology for developing the measures. Second, based on the user profile definition developed in WP1, we will explore the development of Quality of Experience (QoE) models that use the objective metrics to predict QoE in the future without further subjective experiments. We will also develop methods for creating the mappings between user profiles, preferences and QoE, and for carrying out network analysis to determine subgroups of users according to the similarities of their QoEs.
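One simple way to picture the network analysis mentioned above is to link every pair of users whose QoE scores are similar enough and then read the subgroups off as the connected components of that network. The sketch below does this with cosine similarity and a fixed threshold. Both choices, as well as the function names and the shape of the input (one list of QoE scores per user), are assumptions made for illustration and not the method defined by the project.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equally long score vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def qoe_subgroups(qoe, threshold=0.95):
    """Group users whose QoE vectors are linked by similarity >= threshold.

    qoe: dict mapping a user id to a list of QoE scores (hypothetical format).
    Returns a list of sorted user-id lists, one per connected component.
    """
    users = list(qoe)
    neighbours = {u: set() for u in users}
    for i, u in enumerate(users):
        for v in users[i + 1:]:
            if cosine(qoe[u], qoe[v]) >= threshold:
                neighbours[u].add(v)
                neighbours[v].add(u)

    groups, seen = [], set()
    for start in users:
        if start in seen:
            continue
        # Depth-first search collects everyone reachable from this user.
        stack, group = [start], set()
        while stack:
            node = stack.pop()
            if node in group:
                continue
            group.add(node)
            stack.extend(neighbours[node] - group)
        seen |= group
        groups.append(sorted(group))
    return groups
```

With four users whose scores fall into two clearly different patterns, the function returns two groups of two users each; a lower threshold merges groups, a higher one splits them.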

This WP must, first of all, result in a set of recommendations (guidelines), extracted from the relevant literature on usability in multimodal interface design, which the remaining partners can use as a reference when conducting their design and development tasks. The evaluation scenarios and the features to evaluate should then be identified, and the evaluation protocols defined, tested and applied. Considering the unpredictable nature of some of the solutions proposed in the other WPs, evaluation scenarios and protocols might need readjustment along the way. The framework that will be available after WP7 will allow objective data to be gathered to characterize user attention, reactions and cognitive load, and will support the integration of these data in the usability studies.

HCI experts participating in the project will prepare satisfaction questionnaires and conduct the reviews based on performance analyses, in particular of efficiency and effectiveness, which will be measured through reaction times, gesture analysis where applicable, and eye tracking. The eye tracking data will be collected by a non-intrusive eye tracker. Where applicable, the brain imaging data will be collected with an fNIRS optical imaging facility, which is also non-intrusive data collection equipment. The experimental investigations will aim at developing a Quality of Experience (QoE) model based on objective measures.

The participants' profiles and their levels of impairment will be defined in WP1, and a psychologist is expected to review the experimental protocols and monitor these studies. Regular pauses and short sessions, for example, will be considered whenever deemed necessary, so that participants are not subjected to mental or physical stress. This is also valuable from a methodological point of view, since a relaxed, stress-free participant is an important condition for obtaining better evaluation results. The studies will also be approved beforehand by the ethical committees of each country and will comply with national and EU legislation and FP7 rules.

| Country | Activity | Description | Audience | Intended Date |
| --- | --- | --- | --- | --- |
| Portugal | UAVR Journal and Newsletter | Dissemination of relevant content from IRIS in the UAVR online journal and newsletter. Dissemination is also directed to the mass media (e.g., Lusa, the Portuguese news agency). | Academia and general public | Whenever relevant |
| | University Open Day | UAVR opens its doors to students across the country and organizes activities to show its research outputs, including the different outcomes from IRIS. | General public (with a strong presence of students of all ages) | Every year after the first |
| | IRIS Demonstration Event | Allows the general public to experience the different applications proposed by IRIS and collects informal feedback. | General public (with some focus on the elderly) | Close to the end of IRIS |
| | E-newsletter | A biannual e-newsletter will be published online with the news and advances achieved during that period. | General public | Every 6 months |
| | MSFT Open Day | MSFT opens its premises to students across the country. These tours will include a demonstration of the multiple prototypes developed in IRIS. | National students of all ages | Every year after the second |
| Turkey | Workshop Day | Workshop for the general public about the outcomes and possible implications of the project. | Broadcast through the METU webinar system and made available to all other universities | Close to the end of IRIS |
| | Press release | Dissemination of IRIS to the mass media via the METU Press Coordinator Office. | General public | Whenever relevant |
| | METU Open Day | On the open day, METU introduces research projects to national students of all ages. A demonstration of an IRIS prototype will be included in this activity. | National students of all ages | Close to the end of IRIS |
| | CEBIT Bilisim Eurasia Exposition, Istanbul | A leading Eurasian IT, technology and communication platform that brings together ICT companies, government and media from the Eurasian region in an exposition. | General public | Close to the end of IRIS |
| Spain | Engineering and Architecture week | Presents research and teaching activities to society, especially to primary and secondary school students but also to the general public (university or not). Includes workshops, contests, 3D video projections, lectures, demonstrations, exhibitions, etc. | General public (from children to elderly people, and all members of the university community) | Every year after the first |
| | University open day and welcome day | General dissemination mainly focused on engineering students and future students. | General public with a focus on students | Every year after the first |
| | Public talks, public demonstrations and articles in newspapers | General dissemination of IRIS to the general public through radio and TV talks, demonstrations at trade fairs and contributions to newspapers. | General public | Whenever relevant |

This WP will ensure that all planned objectives are successfully met during the lifetime of the project. It includes: inception, specification and planning; project quality control; financial administration; coordination of partners.
